How to Create Inclusive AI Images: A Guide to Bias-Free Prompting

AI images are everywhere, but without the right prompts, they often leave people out. Learn how to move past stereotypes and create visuals that reflect a diverse, inclusive world.

AI image generation tools are fast and creative, but without thoughtful prompts, they often reinforce stereotypes. This article unpacks how to write image prompts that produce more inclusive, representative visuals.

AI image generators are booming, but inclusivity hasn’t caught up

AI-generated images are exploding in popularity, with text-to-image algorithms generating an estimated 150 billion images a year. These fast, creative, cost-effective tools are a go-to solution for everything from e-learning and internal comms to business presentations and social campaigns.

But there’s a catch.

Ask an artificial intelligence tool to generate a “person,” and the result often reflects the same narrow profile: white, male, slim, young, able-bodied, and conventionally attractive. A sanitized, one-size-fits-all version of humanity—regardless of whether that’s what you wanted.

These default outputs are harmful. They erase people, reinforce biases, and exclude audiences. Your workforce and your customers deserve better.

The good news is that with a few thoughtful techniques, you can generate images that respectfully and accurately reflect the full spectrum of human experience. This article illustrates how.

Key Takeaways

- AI-generated images often reinforce bias because the models behind them are trained on unbalanced data, resulting in narrow, stereotypical representations.

- Inclusive prompt engineering can interrupt biased AI defaults by intentionally shaping how people are described and depicted.

- You can prompt for more inclusive results by describing identities, using respectful language, prompting for diverse representation, and adding extra context.

Why AI images are often biased

Most AI image generators are trained on massive datasets scraped from the internet. While that can seem comprehensive, the reality is far from balanced. The images available online—and their associated captions and metadata—tend to overrepresent certain demographics while underrepresenting or stereotyping others.

That skew gets baked into the AI model. So, when a prompt is vague, the AI fills in the gaps—often defaulting to narrow representations that amplify stereotypes. Doctors, CEOs, and lawyers often appear as white men, while caregivers are usually women. Service workers are more likely to show up with darker skin, and people with disabilities are rarely represented at all.

“People learn from seeing or not seeing themselves that maybe they don’t belong.”

—Heather Hiles, chair of Black Girls Code

Shape AI output with inclusive image prompts

AI bias is discouraging, but we’re not stuck with biased results. By refining how we describe people and contexts, we can interrupt the baked-in defaults and guide AI toward more thoughtful, inclusive outcomes.

That’s the idea behind inclusive prompt engineering: writing image prompts that account for identity, context, and representation so that the AI model doesn’t make assumptions for us.

How to approach inclusion in your image prompts

Before writing your prompts, it helps to get clear on what inclusion means in this context. Cramming every identity into a single image or trying to reach a specific diversity quota can feel forced, or even tokenizing. And it often misses the fact that many aspects of identity, like disability or religion, aren’t always visible.

Instead, focus on avoiding harm and making thoughtful and respectful choices. Inclusive images should feel real and relatable, not intrusive, forced, or stereotyped.

How to write prompts that help AI generate images without bias

Generating diverse, inclusive images requires being specific, thoughtful, and intentional with your language. These prompting techniques can help you create imagery that reflects reality.

Lay the groundwork

Every AI image prompt benefits from a solid foundation. Clarity, context, and specificity help you get results that match your intent:

- Be specific about the format and style. To shape the aesthetic, include terms like digital illustration, watercolor, 3D render, editorial style, or cinematic photo.

- Match the tone to your audience. Should the image feel serious, playful, or peaceful? Are you creating a formal report or a friendly course? The mood you set should align with your message and the people you’re aiming to reach.

Specify visible identity details

If you don’t describe the people in your image, the AI model will decide for you—and chances are, it’ll default to narrow and stereotypical representations. You don’t have to cover everything, but the more specific you are, the more likely you are to get inclusive results.

Consider the following visible diversity dimensions:

- Age. Prompt for a mix of age groups and counter common stereotypes—especially in fast-paced or tech-driven industries, where younger people are often overrepresented. For example, you might ask for older adults in tech-savvy, active, or leadership roles.

- Race and ethnicity. Be intentional about representing a range of skin tones, racial and ethnic backgrounds, and cultural identities. Prompt for people from specific communities or request multicultural groups. To counter bias and stereotypes, show people of color—and others from historically underrepresented groups—in leadership, medical, educational, or executive roles.

- Gender. Represent people of all gender identities, including women, men, non-binary people, and other gender-diverse individuals. Challenge stereotypes to reflect the full spectrum of how people live and work. For example, you might prompt for women in construction or men in caregiving professions.

- Body size. Challenge appearance-based stereotypes by including larger-bodied people confidently leading presentations, practicing yoga, or modeling fashion. Aim for body diversity across roles and settings—not just in health or lifestyle imagery.

- Visible disability. Represent people with visible disabilities in empowered roles and avoid turning disability into a symbol or trope. Include a range of assistive devices—not just wheelchairs, but also white canes, prosthetics, hearing aids, guide dogs, and more. Aim for scenes that reflect dignity, agency, and everyday life.

Here’s a simple identity shift that changes the outcome:

|

|

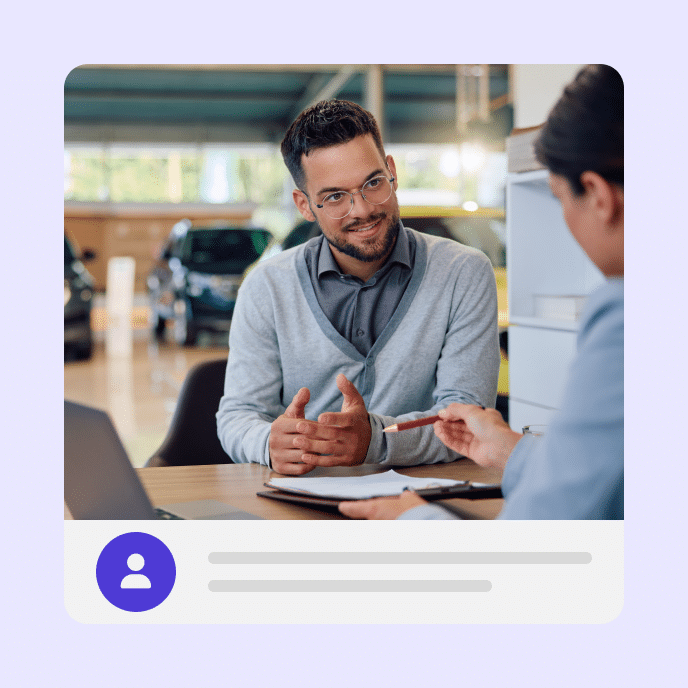

| Before: Professionals in an office business meeting | After: Professionals having a friendly team meeting. The group includes an Asian woman in her 40s, a Black man in his 30s, a larger-bodied woman in her 50s, and a man with salt-and-pepper hair. |

Specify invisible identity details

Many aspects of identity don’t have clear visual indicators. For example:

- Invisible disability. Includes physical, mental, cognitive, sensory, or emotional conditions that may not be immediately apparent.

- Sexual orientation. An internal identity that’s not visible unless disclosed.

- Religious affiliation. Sometimes expressed through attire or objects, but often private or internal.

- Nationality or cultural heritage. Often reflected in language, behavior, or tradition, not appearance.

- Neurodiversity. Encompasses a broad range of neurological differences like ADHD, autism, Tourette’s syndrome, and dyslexia.

- Socioeconomic status. Nearly always invisible. While sometimes inferred through setting, occupation, or clothing, these cues can easily introduce bias.

These identities deserve representation, but visualizing them too literally can lead to clichés and reduce complex experiences to a single trope. Instead, rely on context. Use these tips to guide your prompts:

- Ground scenes in reality. Does the context make sense? Is it respectful, or does it feel forced or tokenized? For example, in the case of socioeconomic status, context might mean depicting everyday life instead of luxury. Dining out could be a casual café, and clothing could reflect accessible, non-branded styles.

- Describe what’s observable. Focus on what’s visible in the scene rather than assuming internal identities. For example, say, “two men picking up a child from school” rather than “two gay men picking up their child.”

- Accept when it can’t be shown. Some identities aren’t visually representable—and that’s OK. Representation doesn’t always require visibility.

Use respectful, people-first language

Outdated, loaded, or ableist terms often produce imagery that is just as exclusionary as the words themselves. Instead, write prompts in people-first, identity-affirming language. That means saying “wheelchair user” instead of “wheelchair-bound,” or “larger-bodied person” instead of “overweight person.” These small changes to your image prompt can make a big difference in what the model generates.

Here’s an image prompt that avoids ableist language and creates a more respectful output:

| Before | After |
| --- | --- |
| A wheelchair-bound woman chatting with co-workers in a modern office | A woman who uses a wheelchair chatting with co-workers in a contemporary office |

Add cultural, social, and emotional context

The setting, activity, and emotional tone you include in a prompt all shape how the AI model represents people and their experiences.

Where someone is, what they’re doing, and how they’re shown can shift the meaning of an image entirely. For example, a woman wearing a qipao (traditional Chinese dress) at a party conveys something very different from a woman wearing a qipao in the office.

Here’s how a few extra details can completely reshape an image:

| Before | After |
| --- | --- |
| A man wearing a manimamah | A joyful man wearing a manimamah laughing with friends while sharing snacks at a sunny outdoor party |

Prompt for diverse representation

Even when you don’t have a specific identity in mind, you can still guide AI toward more inclusive results by prompting for diverse representation. Simple terms like “diverse,” “multicultural,” “inclusive,” or “representing a range of backgrounds” help interrupt one-dimensional defaults.

This is especially useful for group scenes, workplace images, or general-purpose visuals where showing a mix of people matters.

Here’s a small change that invites more balance into the output:

|

|

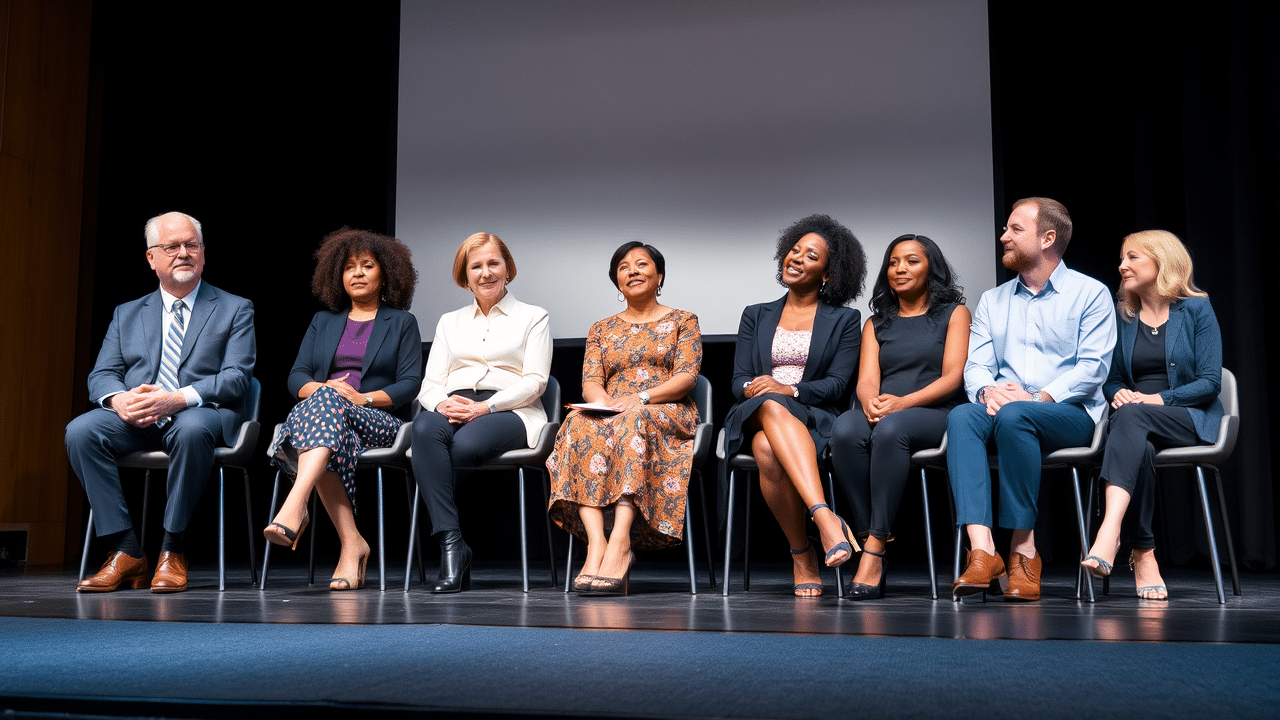

| Before: A business panel discussion on stage | After: A multicultural, age-diverse, and gender-diverse professional business panel discussion on stage |

Inclusive AI imagery starts with inclusive wording

Bias is the AI default, but it doesn’t have to be the final result. When generated images misrepresent or stereotype people, the solution isn’t to blame the tool. The responsibility lies with us. AI image generators are only as inclusive as the prompts we give them. When we rely on defaults, we get default results that leave people out.

Thankfully, inclusive imagery is possible—your AI tool just needs a little extra guidance. You can direct it to create more representative visuals by writing prompts that describe identities, use respectful language, invite diversity, and ground your scene with context. Inclusive representation takes deliberate, informed choices. It means educating ourselves, challenging assumptions, and crafting visuals that reflect our audiences, counter harmful norms, and uphold dignity.

And don’t forget the follow-through: Inclusive visuals should be paired with accessible descriptions. Thoughtful alt text invites everyone into the story. Focus on what’s relevant, describe identity with care, and center the context.

Want to continue sharpening your skills? Check out our guide to writing great AI art prompts.

Author’s note: All images in this post were created using AI Assistant, with “before” examples showing default outputs and “after” images reflecting inclusive prompt techniques.
